“Theory without practice is empty, but equally, practice without theory is blind.” (I. Kant)

Hello! My name is Jingjing Zheng (she/her). My current research interests include theoretically grounded efficient training and inference of large models, low-rank and sparse representation learning with applications to AI efficiency, and the safety and reliability of LLMs under resource constraints. My academic background spans art and design (B.A.), mathematics (M.S. and a Ph.D. in progress), and computer science (Ph.D., completed).

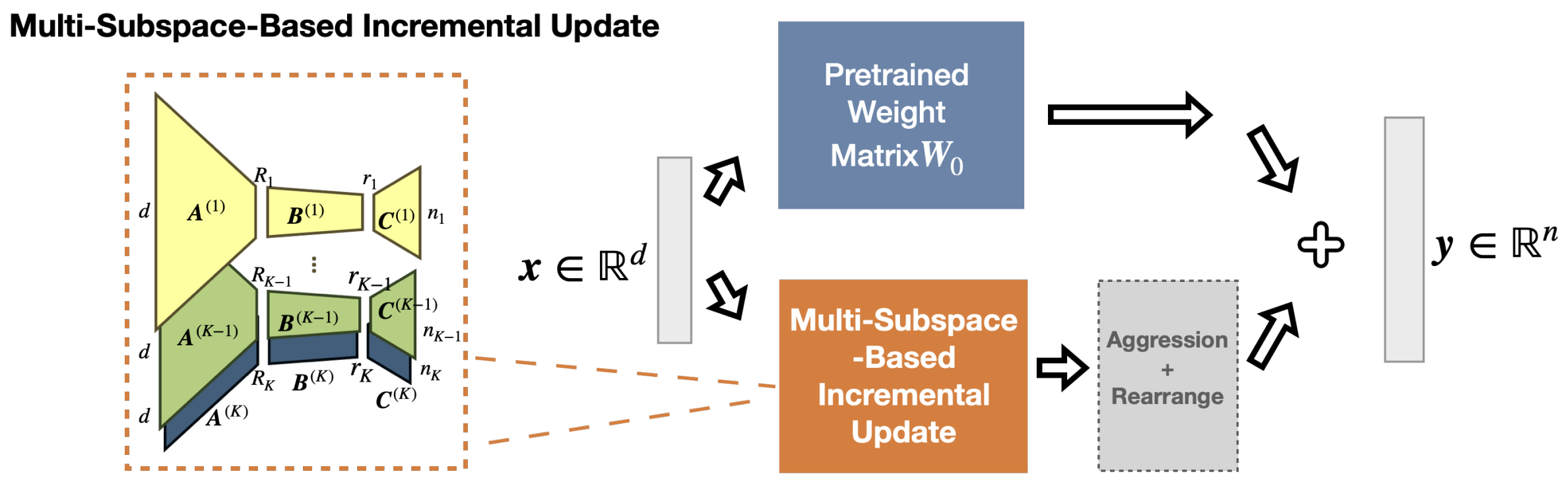

Since 2023, I have been pursuing my doctoral studies in Mathematics at the University of British Columbia, under the supervision of Prof. Yankai Cao. In summer 2024, I undertook a visiting research internship at the Zero Lab, Peking University, where I worked with Prof. Zhouchen Lin on low-rank-based efficient fine-tuning of large models. In summer 2025, I co-founded GradientX Technologies Inc., a startup focused on building the next generation of personalized financial intelligence.

Recent News

- [2025] Joined the Organizing Committee of Women and Gender-diverse Mathematicians at UBC (WGM).

- [2025–2026] Appointed to the UBC Green College Academic Committee.

- [2025] Our startup GradientX was selected for the Lab2Market Validate Program (funded).

- [2025] Two papers accepted to NeurIPS 2025 (see you in San Diego!).

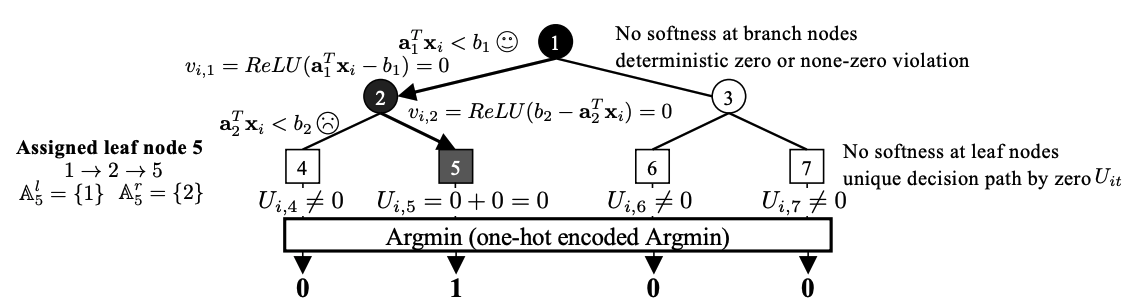

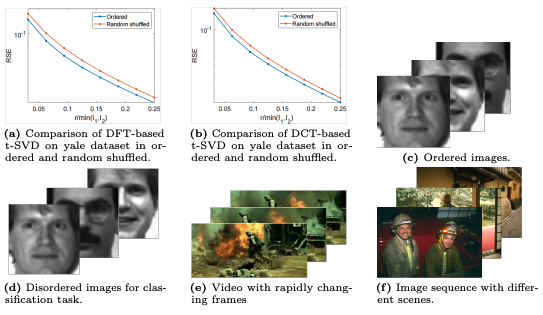

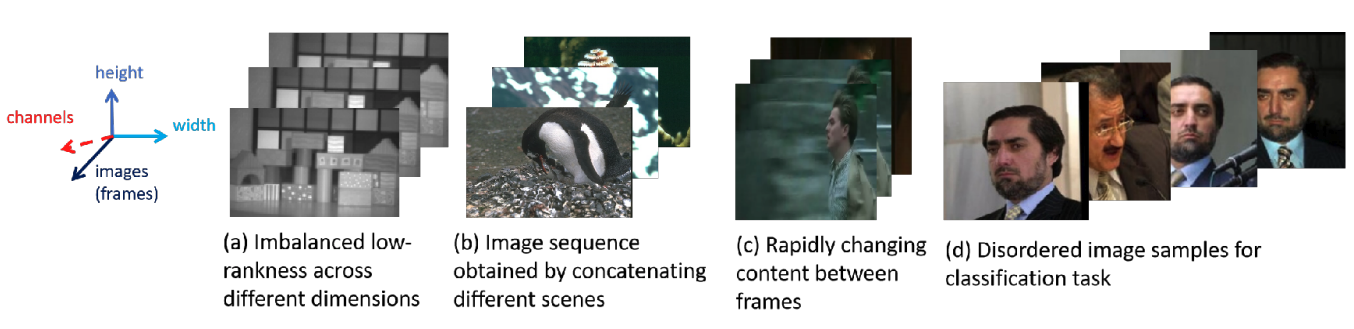

Latest Projects